If you are a business person wondering why you should invest in DevOps / MLOps, this is your guide in terms of real live money. We'll try to keep this as non-technical as possible. The few technical terms we do mention are there because they are ABSOLUTELY essential and should have budget allocated to them.

Be sure to read to the end for instructions on how to execute an analysis of expected gains from infrastructure changes required for MLOps in your organisation.

Caveat: this is a work in progress. Expect updates.

Motivation

As a business person you have probably been traumatised by the experience of listening to a bunch of geeks fight over some infrastructure change, finally decide on something, move forward with the project, and have it crash and burn in a fiery ball of lateness. You know that infrastructure change can be costly in terms of time, money, man-hours, opportunity cost, time to market delays, etc. So with the latest buzzword of MLOps floating around the business-o-sphere, how should you go about evaluating it?

That is what this post is for. We will present a framework that you can plug real numbers into in order to make your estimate. We will break down the types of work that Data Scientists and Data Engineers spend their time on, which parts are optimised by certain tools, and what becomes possible as a result.

Assumptions

How do you know this article applies to you?

It applies if you are not Google, Amazon, the U.S. Government, etc. Their needs are different because information is their core business and/or because of their sheer size. Let's not use something just because Google does... your business is probably different from theirs. This should apply to pretty much everyone else.

It especially applies if you have legacy systems such as Oracle, SAS, SAP, Microsoft SQL Server, in-house infrastructure, etc. You can find out if this is you by surveying your technical people.

The Scenario

You are in a leadership position without deep knowledge of MLOps, Data Science, or Data Engineering. Your technical workers are advocating for an infrastructure change to allow for MLOps. You are faced with a decision that will require significant budget, planning and time. You view it as risky. You are having a hard time understanding what exactly is being asked for and what the expected benefits are.

Read this, take it to your technical people, and ask them to pose their request in terms of this framework.

The Framework

So how the heck do those nerds actually spend their time? They are usually pretty bad at explaining it without putting you to sleep with acronyms and other smart sounding things so it's totally understandable if you're not sure. Let's try to rectify that. We'll present a few different types of work to be done using business language.

Type 1: One-off assessment

These are costs that you experience once at the beginning of the project. They might be significant but will be averaged out as long as the project results in success. This cost essentially depends on two things:

1. How efficiently is your business set up in the first place?

2. How well do your technical workers know the technology?

For (1) you can think in terms of the following questions: can people communicate? Are things documented? Are people over-worked or do they have space to think? Does it require 10 meetings to get a simple task done? It is important to be honest here but you can be sure that your technical people will tell you exactly how it is. Don't come up with this yourself, ask them. You won't be able to do anything to change this in the short term so just take it as fact and move on with the assessment.

For (2) you will need to add some calendar weeks or months of exploration time if your technical people don't know the technology you are migrating toward. You'll also need to budget more time for things to go wrong because, for sure, they won't get it right the first time they are using it. This can be the biggest hurdle because it could easily result in months of delay and you start getting hit by opportunity costs as your engineers will be spending less time on the roadmap the longer it drags on.

As mentioned, these costs will be amortised out over time as long as the project is a success.

Type 2: Monthly costs

These are the scary ones as they have no end in sight. We all know the difference between a $100 per person one-off cost and a few years of a $10 per person per month subscription. $100 is a lot more than $10. In fact it is 10 times more. However, after 10 months of the subscription you've broken even. In two years you have paid more than double. Then you add a few more people and now you're paying $30/month. Before you know it your costs have taken on a life of their own and you cannot change because you have become dependent on the subscription.
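For anyone who wants to check the arithmetic, here is a minimal sketch in Python. It uses the illustrative $100 and $10 figures from the paragraph above; the function names are made up for this example.

```python
import math

def months_to_break_even(one_off_cost: float, monthly_cost: float) -> int:
    """Whole months after which the subscription has cost at least the one-off price."""
    return math.ceil(one_off_cost / monthly_cost)

def subscription_total(monthly_cost: float, months: int, people: int = 1) -> float:
    """Total paid for a per-person monthly subscription over a number of months."""
    return monthly_cost * months * people

# Illustrative figures from the text: $100 one-off vs $10 per person per month.
break_even = months_to_break_even(100, 10)
two_year_total = subscription_total(10, 24)
three_people_two_years = subscription_total(10, 24, people=3)

print(break_even, two_year_total, three_people_two_years)
```

Swap in your own prices and head count to see how quickly a "cheap" recurring cost overtakes the one-off one.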

Choosing good MLOps is choosing to pay the one-off price rather than the subscription for your team. So let's look at the different types of these costs.

Manual tasks

When a technical worker cannot automate a part of their job, they must do manual work. Manual work is the death of a technical worker's soul. Plus it takes time away from other, more productive tasks. Plus it interrupts whatever else they are doing. Even seemingly small things like a weekly 20 minute manual task can easily pile up if workers are not given the tooling to automate them. Before you know it, you are spending a part-time job's worth of hours executing manual tasks. The solution is to automate them away using MLOps.
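To see how small tasks pile up, here is a rough sketch. The 20 minute weekly task comes from the paragraph above; the number of tasks and the team size are hypothetical placeholders that you should replace with your own.

```python
WEEKS_PER_YEAR = 52

def yearly_hours(minutes_per_run: float, runs_per_week: float, people: int = 1) -> float:
    """Hours per year spent on a recurring manual task."""
    return minutes_per_run * runs_per_week * WEEKS_PER_YEAR * people / 60

# One 20 minute task, once a week, for one person.
single_task = yearly_hours(20, 1)

# Hypothetical team: 5 people, each with 10 such weekly tasks.
team_total = yearly_hours(20, 10, people=5)

print(f"one task: {single_task:.1f} h/year, team: {team_total:.0f} h/year")
```

A single task looks harmless on its own; it is the multiplication across tasks and people that turns it into a part-time job.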

Mechanical time

When a Data Scientist (DS) or Data Engineer (DE) hits a button to process some data or train a model, it needs to be fast.

Data Science and Data Engineering are different from application development. An application developer who needs to test a piece of functionality can usually spin up an entire environment on their own machine and test it as a whole. With data work this is harder because the operations themselves can take much longer: processing large amounts of data requires more computing power. So it actually becomes really important for the data processing operations to be FAST.

You might think: well if they are slow, the engineer can just work on something else in the meantime, right? This is true to some extent but breaks down pretty quickly. There is a difference between a maker's schedule and a manager's schedule. Engineers and scientists require longer amounts of unbroken time to do their work. The longer they have to wait for something to run, the more it breaks their train of thought. Since their tasks require the engineer to keep lots of complex moving parts in their head at a time, these context switches are an absolute killer for productivity. If they are results oriented, they may decide to move to an environment in which they are empowered to ship things to production faster.

So we need to take into account how long it takes for an engineer to run an operation.
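One way to put a number on this is sketched below. Every figure here is a hypothetical placeholder, including the assumption that a wait longer than a couple of minutes breaks the engineer's focus and costs extra time to get back into the task.

```python
def daily_minutes_lost(runs_per_day: int, wait_minutes: float,
                       refocus_minutes: float, focus_threshold_minutes: float = 2) -> float:
    """Estimate minutes lost per day to waiting on operations.

    Assumption: if a single wait exceeds the threshold, the engineer loses
    their train of thought and pays an extra refocus penalty for that run.
    """
    penalty = refocus_minutes if wait_minutes > focus_threshold_minutes else 0
    return runs_per_day * (wait_minutes + penalty)

# Hypothetical slow setup: 10 runs a day, 15 minutes each, 10 minutes to refocus.
slow = daily_minutes_lost(runs_per_day=10, wait_minutes=15, refocus_minutes=10)

# Hypothetical fast setup: same 10 runs, 1 minute each, no loss of focus.
fast = daily_minutes_lost(runs_per_day=10, wait_minutes=1, refocus_minutes=10)

print(slow, fast)
```

Ask your technical people for their real run counts and wait times; the refocus penalty is a judgment call they are best placed to make.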

Non-technical engineer hours

No matter how technical a worker you are, if you are working in industry you are having meetings. For the reasons mentioned above, you should strive to have as few meetings as possible without sacrificing quality. One of the mantras of DevOps (and thus MLOps) is self-service. The technical worker should own their own deployments. When the technical worker owns their deployments, they have fewer meetings with other teams to achieve the same outcome. This is good.

The less MLOps you have, the more meetings you have. The more meetings you have, the less your technical workers can ship.

Technical engineering hours

This one innocent-looking section in the middle of this blog post is what many people think is the entire job description. It is important for sure but it is only one part and it is affected by the quality of the tools that you have. This section is a question of how many technical tasks a technical worker can complete, given that they can get an unbroken stream of time to work on them.

This essentially boils down to the feature completeness of your infrastructure. The more features it has that you need, the fewer things that your engineers will need to develop themselves.

Example: Setting up a Spark cluster in-house and on-prem for the first time can take months whereas getting access to the same functionality through Databricks can be achieved in an afternoon.

Employee turnover

The worse your tooling, the more turnover you have. Many technical workers are motivated by learning. Others are more outcome oriented and like to deliver. In both cases, an absence of MLOps may cause your Data Scientists / Data Engineers to become bored and frustrated with their inability to ship. Turnover among technical workers is widely recognised to cause non-trivial delays, lost human hours, and real costs. Depending on the culture of your organisation, you may or may not be able to mitigate this so be sure to be honest with yourself.

Time to market opportunity cost

The longer a project sits in the pipeline the higher your opportunity cost. If you are a large organisation with many customers, a single ML model might represent 6 or 7 figures in yearly revenue. There are two factors that you should take into account with your technical workers and they are:

1. How long does it take to develop and release a model?

2. How many projects can a single technical worker maintain in production?

(1) is obvious but (2) generally gets less consideration. Each time a model or dashboard is put into production, everything that went into creating it must be maintained. The models must be re-trained. The ETLs must be re-run on an hourly, daily, weekly, or monthly basis. The less MLOps that you have, the more time the technical worker must spend executing manual tasks that they should be able to automate.

We regularly find that improved tooling can literally double (or more) the number of projects a single technical worker can maintain in production. So yeah, you can double the ROI on your technical people's salaries with the right tooling.
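The capacity argument can be sketched in a few lines. The hours below are hypothetical placeholders, not measurements; the point is only that halving the maintenance time per project doubles the number of projects one person can keep alive.

```python
def max_projects(available_hours_per_month: float,
                 maintenance_hours_per_project: float) -> int:
    """How many production projects one worker can maintain per month."""
    return int(available_hours_per_month // maintenance_hours_per_project)

# Hypothetical: a worker has 80 hours a month available for maintenance.
manual_tooling = max_projects(80, maintenance_hours_per_project=20)
automated_tooling = max_projects(80, maintenance_hours_per_project=10)

print(manual_tooling, automated_tooling)
```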

The tech

The optimal environment that allows for technical workers to deploy models and data pipelines has (among others) the following characteristics:

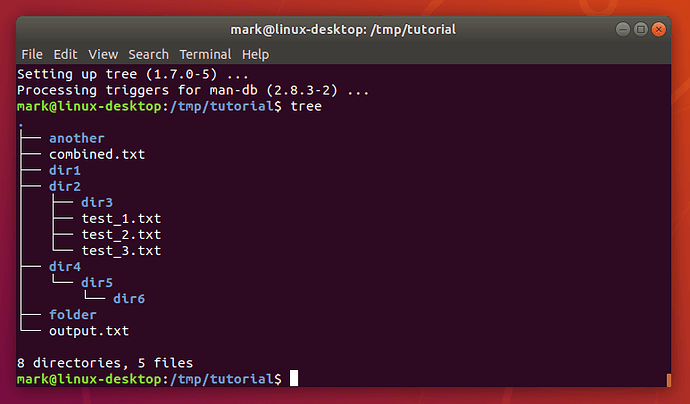

Everything must be runnable via a command. A command is a string of characters that represents a button you can push. We all know from the movies that a command line looks something like this:

Every button that you push on a computer should have a corresponding command. If it does not have a corresponding command it means you are locked into something called a graphical user interface (GUI). A GUI requires a human operating a mouse and clicking on it to run. If something requires a human clicking a mouse then you will never be able to automate it away. If you can run it as a command then it can be included in things called CI/CD pipelines which are the primary method of automation in the world of DevOps and MLOps.
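For your technical people, here is a minimal sketch of what "runnable via a command" can look like in Python. The script, its `--data` and `--epochs` options, and the `train_model` placeholder are all hypothetical; a real training step would replace the placeholder.

```python
import argparse

def train_model(data_path: str, epochs: int) -> str:
    """Placeholder for a real training step."""
    return f"trained on {data_path} for {epochs} epochs"

def main(argv=None) -> str:
    """Parse command-line options and run the training step."""
    parser = argparse.ArgumentParser(description="Train a model from the command line.")
    parser.add_argument("--data", required=True, help="Path to the training data")
    parser.add_argument("--epochs", type=int, default=5, help="Number of training passes")
    args = parser.parse_args(argv)
    result = train_model(args.data, args.epochs)
    print(result)
    return result

# In a real script you would call main() with no arguments so that it reads
# whatever was typed on the command line. Here the arguments are passed
# explicitly so the example runs on its own.
result = main(["--data", "sales.csv", "--epochs", "3"])
```

Because nothing here needs a mouse, the same step can be triggered by a person, a schedule, or an automated pipeline.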

Command lines available in a Linux or Unix environment are the most feature complete, well-studied, and robust so this is what DareData recommends. If you are a Windows shop then this task is made more difficult but PowerShell does exist so there is a path.

Model and data versioning is a must. Unlike most other things in MLOps this one does not have a direct parallel in traditional DevOps. This is because data and models differ from anything in the application world in their size and nature. There is one set of tools used to track versions of your code but you need a different set of tools to track versions of your data and models. Tools like MLflow, DVC, and Git LFS tackle these needs.
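One common idea in this space is to identify a dataset by a fingerprint of its contents, so that any change to the data produces a new version label. The sketch below illustrates only that idea with Python's standard library; it is not the API of MLflow, DVC, or Git LFS, and the function name and sample data are made up.

```python
import hashlib

def content_version(data: bytes) -> str:
    """Short fingerprint of the data; identical contents give identical labels."""
    return hashlib.sha256(data).hexdigest()[:12]

# Hypothetical tiny datasets: the second differs from the first by one value.
original = content_version(b"customer_id,churned\n1,0\n2,1\n")
modified = content_version(b"customer_id,churned\n1,0\n2,0\n")
unchanged_copy = content_version(b"customer_id,churned\n1,0\n2,1\n")

print(original == unchanged_copy, original == modified)
```

Recording a fingerprint like this next to each trained model is what lets a team answer "which data was this model trained on?" months later.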

ETL automation and scheduling. Running ETLs that process data to prepare it for modeling or dashboarding requires tooling. You can't just run a complex ETL on a simple schedule. You need to have it retry if something goes wrong. You need access to organised logs to debug it. You may need to coordinate data flow between multiple systems with arbitrary triggers. You may need some workflows to depend on others. You may need to perform arbitrary logic in the middle of it, which requires the flexibility of an open source programming language. There are enough needs that using tools like Digdag or Airflow is absolutely essential. Without them you significantly increase the maintenance hours of a deployment, resulting in soul-destroying manual tasks.
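To make two of these needs concrete, here is a plain Python sketch of "retry if something goes wrong" with logs to debug it. It is a toy illustration of what dedicated schedulers take care of for you, not the API of Digdag or Airflow; `run_with_retries` and `flaky_extract` are hypothetical names.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("etl")

def run_with_retries(task, max_attempts: int = 3, wait_seconds: float = 0.0):
    """Run a task, logging each attempt and retrying on failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
            logger.info("attempt %d succeeded", attempt)
            return result
        except Exception as error:
            logger.warning("attempt %d failed: %s", attempt, error)
            if attempt == max_attempts:
                raise  # give up and surface the last error
            time.sleep(wait_seconds)

# Hypothetical flaky step: fails twice, then succeeds on the third call.
calls = {"count": 0}

def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source system unavailable")
    return ["row 1", "row 2"]

rows = run_with_retries(flaky_extract)
print(rows)
```

Without tooling, each of those failed attempts is a person noticing the failure, reading through output, and pressing the button again.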

CI/CD pipelines are the glue that makes it all possible. What we are trying to achieve at the end of the day is the ability for your technical workers to "push" new code to a repo and have it automatically checked for errors and deployed to production. CI/CD pipelines are how you do this. We recommend using GitLab for your code versioning as well as CI/CD since it was built from day one with DevOps in mind.
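The "check, then deploy" logic of a pipeline can be sketched in plain Python. Real pipelines are normally written in the CI/CD tool's own configuration format; this is only an illustration of the control flow, and the check names and version labels are hypothetical.

```python
from typing import Callable, List, Tuple

def run_pipeline(checks: List[Tuple[str, Callable[[], bool]]],
                 deploy: Callable[[], None]) -> str:
    """Run each check in order; deploy only if every check passes."""
    for name, check in checks:
        if not check():
            return f"stopped: {name} failed"
    deploy()
    return "deployed"

deployed_versions = []

# A push where every check passes gets deployed.
passing = run_pipeline(
    checks=[("code style", lambda: True), ("unit tests", lambda: True)],
    deploy=lambda: deployed_versions.append("v2"),
)

# A push with a failing check never reaches production.
failing = run_pipeline(
    checks=[("code style", lambda: True), ("unit tests", lambda: False)],
    deploy=lambda: deployed_versions.append("v3"),
)

print(passing, failing, deployed_versions)
```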

Do the analysis yourself

Take this framework and this spreadsheet to your technical workers and modify / fill it in. This simulator is set up for modeling 3 scenarios:

1. Your current scenario, which is probably not great, which is why you are reading this.

2. An optimal scenario where the technical team gets to use every single tool that they want.

3. A compromise scenario, which is probably more realistic than (2).

The spreadsheet has three sheets:

1. Simulation. This is where you fill in numbers of how much time your technical workers will spend on each of the types of tasks outlined in this article.

2. Summary. Purely cosmetic, presents the same information as the simulation.

3. Maintenance over time. Fill this in with the results of (1) to estimate how many projects a single technical worker can put into production and maintain before they have more maintenance tasks than they can do.

In conclusion

Infrastructure changes are expensive. They also result in huge return on investment if and only if they are done correctly. If done incorrectly the result is huge costs, employee turnover, and you're still stuck with your legacy system. If done correctly there is a huge impact on revenue with a happy and motivated team to accompany it.

However, because the field is so new, doing it correctly is difficult and this is pretty much why DareData exists as a business. Shoot me an email at sam@daredata.engineering to schedule a free one hour strategic consultation.