LLMOps will definitely be a game changer for the future.

Have you heard of MLOps?

MLOps (Machine Learning Operations) is a set of practices and tools that combines machine learning, software engineering, and DevOps principles to scale and automate the end-to-end lifecycle of machine learning models: from development and training to deployment, monitoring, and maintenance in production.

Its goal is to enable faster experimentation, reproducibility, scalability, and reliable delivery of ML models, while ensuring collaboration between data scientists, engineers, and operations teams.

But as large language models (LLMs) move from research labs to real-world products, we’re entering a new age of LLMOps.

LLMOps is about managing the entire lifecycle of applications powered by LLMs: from prompt engineering to fine-tuning, evaluation, deployment, monitoring, and continuous improvement.

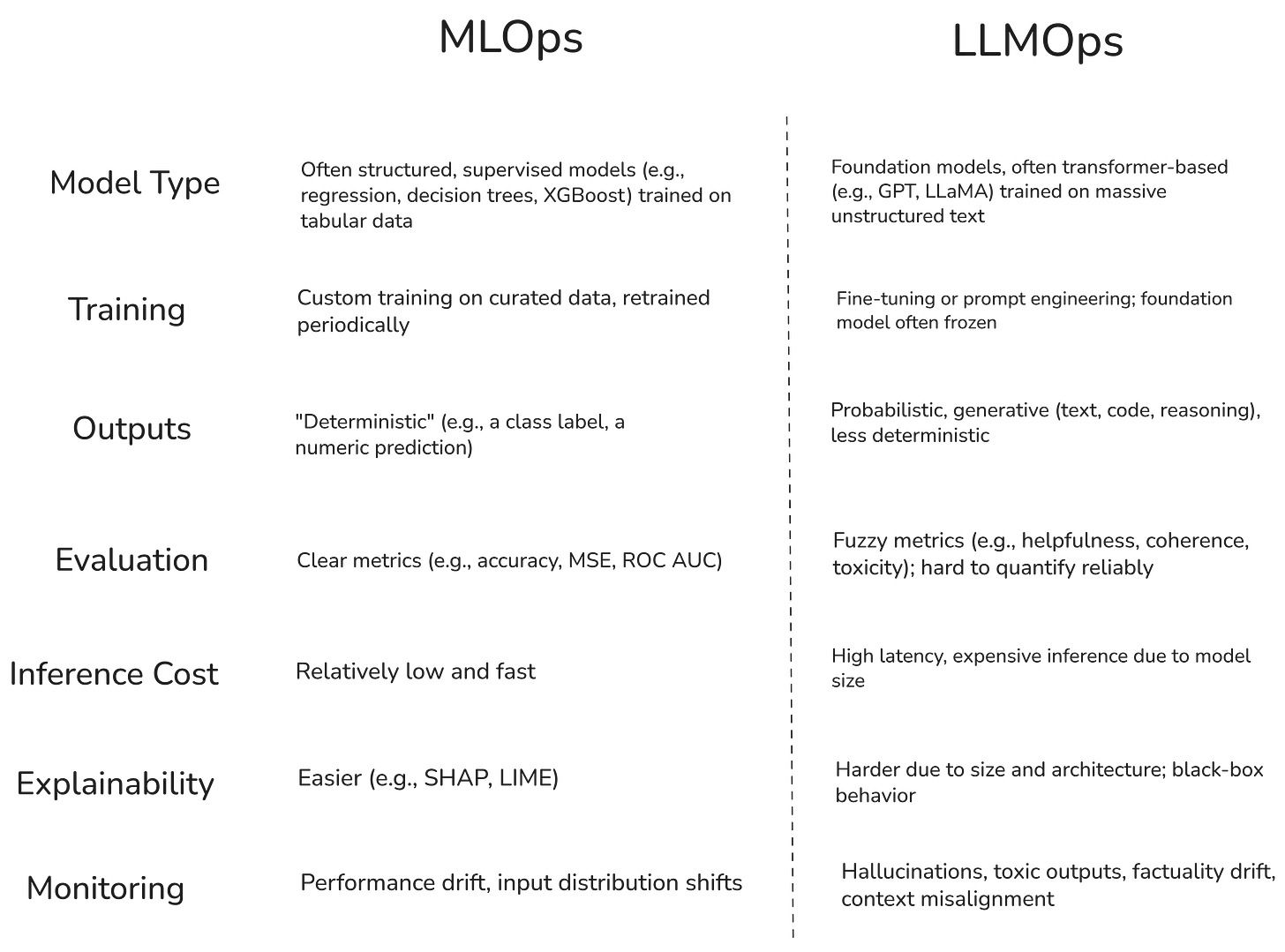

But how different is MLOps from LLMOps? The summary below lifts the veil a bit:

LLMOps is not just a technical discipline, as well. It’s product thinking meets model management. As LLMs are not like traditional models and they’re less predictable (highly context-dependent), their behavior can drift significantly with small changes in data or prompts. Ensuring they stay reliable, accurate, and safe over time requires new approaches.

Some key elements of LLMOps:

- Prompt management: Tracking and versioning prompts like you would with code.

- Evaluation frameworks: Going beyond accuracy: measuring helpfulness, toxicity, factuality, or using evals.

- Monitoring & feedback loops: Capturing how users interact with the model, spotting issues early.

- Fine-tuning or retrieval updates: Improving model performance on the fly without retraining from scratch.

- Cost & latency control: On one hand Transformers-based models aren’t cheap, on the other users won’t wait 10 seconds for a response.

LLMOps is still a developing field, but it’s becoming a key differentiator for teams building with LLMs in production. It’s where infrastructure meets UX, where AI meets operations.

If you’re shipping anything built on top of LLMs, you should definitely check out the LLMOps concept.

In this blog post, I’ll describe it a bit.

Why LLMs Are Different

Unlike traditional ML models, LLMs are:

- Non-deterministic: The same input can produce slightly different outputs.

- Highly context-dependent: Prompt wording, model temperature, and history affect the result.

- Pretrained at scale: You typically don’t train from scratch (unless you have A LOT of money), you prompt, fine-tune, or adapt.

- Expensive: Each call to a large model costs money and time.

- The hardest: they are difficult to evaluate. There’s no single metric that captures "quality" or "truthfulness."

Because of this, product teams working with LLMs need to rethink how they manage their systems. And this is where LLMOps comes in.

What LLMOps Involves

Here are the core components of a good LLMOps workflow:

1. Prompt Engineering & Versioning

Prompts are the new model weights. Teams need tools to test, iterate, and version them, especially as changes can drastically impact output.

2. Evaluation Beyond Accuracy

LLM outputs aren’t binary (or even multiclass). So long cat vs. dogs predictions.

You need to evaluate:

- Relevance

- Helpfulness

- Toxicity

- Factual accuracy

- Style/tone

Due to the proliferation of B2C AI apps, users are very picky and demanding from AI tools output. LLMOps should include human and automated feedback systems to track these.

3. Monitoring and Observability

How is the model performing in the real world? Are users satisfied? Are there hallucinations? Are costs exploding?

LLMOps introduces structured logging, user feedback loops, and red-teaming tools to surface issues before they become problems.

4. Retrieval-Augmented Generation (RAG)

Most LLM applications rely on bringing in external knowledge through retrieval systems (e.g. vector databases). LLMOps includes maintaining the data pipeline behind these retrieval systems, specially document accuracy, relevance and avoiding contraditory statement - the latter is one of the most difficult things to guarantee in GenAI systems.

5. Fine-tuning and Model Selection

While rare in most apps, some teams go beyond prompting and use fine-tuning or adapter-based methods.

Choosing the right model (open-source vs. API), managing versions, and knowing when to fine-tune is all part of LLMOps. Also, checking if you need managed services vs. cloud deployments should always be considered in the model selection process.

6. Cost and Latency Management

One of the major mistakes in LLM Applications is trying to optimize for performance, while minizing cost and latency. The truth is that the two are, normally, inversely related. Take Deep Research from OpenAI - it’s very accurate, provides great outputs but it’s expensive and takes more time than normal model interaction.

The same is true for every LLM based application. If you want more performance, you should expect more cost / time to inference.

LLMs are also more expensive and slower compared to traditional APIs.

LLMOps can help, of course: setting caching strategies, fallback models, batch inference, and optimizing for both performance and cost.

Who Needs LLMOps?

If you’re:

- Building a chatbot or virtual assistant,

- Integrating LLMs into your SaaS,

- Using RAG to bring company data into answers,

- Deploying summarization or classification pipelines,

.. you should investigate more about LLMOps and how to natively include it in your solution.

It’s not just a concern for ML engineers as it also involves:

- Product managers,

- Prompt engineers,

- Backend engineers,

- UX teams.

Building with LLMs is still a system problem.

Where the Field Is Going

LLMOps is still young, but we’re seeing an ecosystem form around it. New tools are emerging for:

- Prompt/version management (PromptLayer, LlamaIndex, Helicone)

- LLM observability (Langfuse, Arize, WhyLabs)

- Evaluation and feedback (Truera, Humanloop, DareData GenOS)

- Experiment tracking and optimization (Weights & Biases, Ragas)

In the future, we’ll likely see more standardized frameworks for shipping LLM-powered products safely, reliably, and efficiently, just like MLOps did for traditional ML.

Final Thoughts

LLMs are powerful, but unpredictable. Putting them in production is as much about infrastructure, observability, and iteration as it is about clever prompts or model selection.

If you’re building with LLMs, it’s worth thinking about your LLMOps stack early. It may be the difference between a clever demo and a reliable product that is future proof.

DareData’s GenOS has been natively incorporating LLMOps into our tool Gen-OS. If you’re interested to know more, let me know at ivo@daredata.ai