First things first: I do not consider myself a luddite of any kind. Throughout my career, I've worked with technology, pushing organizations towards a sustainable and innovative way of working with data. By luck, I had the chance to receive formal training in Data Science and Machine Learning relatively early in the AI boom and was excited to work on projects that improved people's productivity using data. And I still believe that Artificial Intelligence will help us achieve a better version of society.

Personally, I've always been interested in understanding how society relates to technology. I am still amazed at how major technological and scientific improvements have had a significant impact on our life expectancies (despite ongoing inequality) and given everyone access to better education and more opportunities. On a personal note, I'll never forget the day I had the opportunity to watch Robert Shiller's economics class on Coursera in 2013 - the fact that I was watching a class on Finance and Economics taught by a Nobel Prize winner, on my computer, in a small town in Portugal was staggering, amazing and exciting. This is, of course, a trivial example of how technology has impacted society in the last century, but I believe everyone has had a similar experience - a moment where they felt how technological evolution in their lifetime brought amazing advancements and opportunities.

Nevertheless, although I've been interacting with, training, and deploying machine learning systems for the past 10 years, that does not mean that I am not anxious about the latest developments in technology, particularly regarding Artificial Intelligence. And I believe this is a feeling shared by a lot of developers and AI leaders.

The news is out there - we're talking about how AI could potentially wipe out around 300 million jobs in the economy. The most anxiety-inducing part? This is just the starting point of the discussion about the impact of AI on society. The implications for jobs may only be the tip of the iceberg in the larger discussion about how society will evolve using artificial intelligence. Large Language Models, such as GPT-4 (known through the conversational tool ChatGPT), are already described by some as a weak form of Artificial General Intelligence, with all the implications that this may bring to society. Many people claim to know for certain what these models will mean for our lives, but hear me out: no one has a single clue what will happen in the next 2 or 3 years. Period.

People who say that they know what society will look like in 2 or 3 years (yes, that's the time span we should be looking at, right now) are not telling the truth - mainly for two reasons:

- They don't know. I'm not stating this in a condescending way - most people who speak about Large Language Models (LLMs), just like those who spoke about AlphaGo beating Go champions or about how statistical models can help predict elections, are not formally trained in the fields of data science or machine learning; they are curious enthusiasts of the area. The leap in generalization power (jargon for how well a model can solve things it wasn't specifically trained to do) of models such as GPT-4 compared with past models is similar to the leap from a sheet of paper to a computer.

- Financial incentive. People may also be pushing their own agenda (or their organization's agenda), trying to get ahead of the curve. I do believe that staying ahead of the curve will be physically impossible for any human (or organization) if the exponential growth thesis is confirmed (more about this next).

This blog post will mainly cover three topics: the different theses on where we are right now in the technological evolution curve, what implications this may have for us, and how you can participate in the discussion.

Linear vs. Exponential vs. Sigmoid Growth Thesis

If you want to consider where we are in terms of technological evolution, the most important theme is how you think about technological innovation.

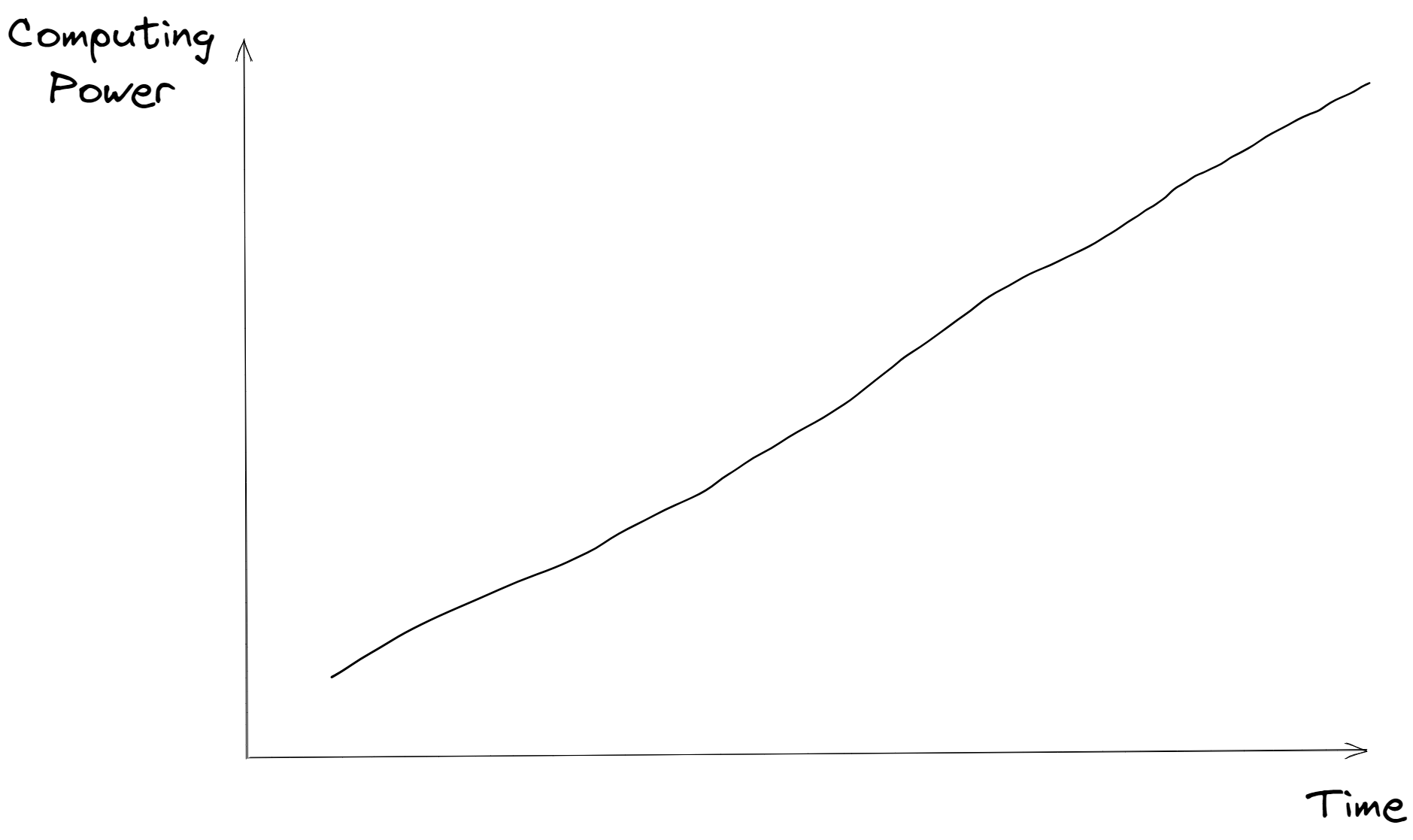

Let's start with the linear hypothesis - which states that technological evolution proceeds at the same pace at every point in time. We can use the number of calculations per second that an artificial entity (such as a computer or processing unit) can perform as a proxy for technological innovation. Given recent developments, it's safe to assume that more computing power also translates into more intelligent machines. This variable will be represented by the abstract "computing power" in the following plot:

If technological innovation happened linearly, we would be able to keep up with it, with a few bumps here and there. Our species is highly adaptable and capable of dealing with change, up until a certain rate.

The problem is... the linear hypothesis has been highly discredited.

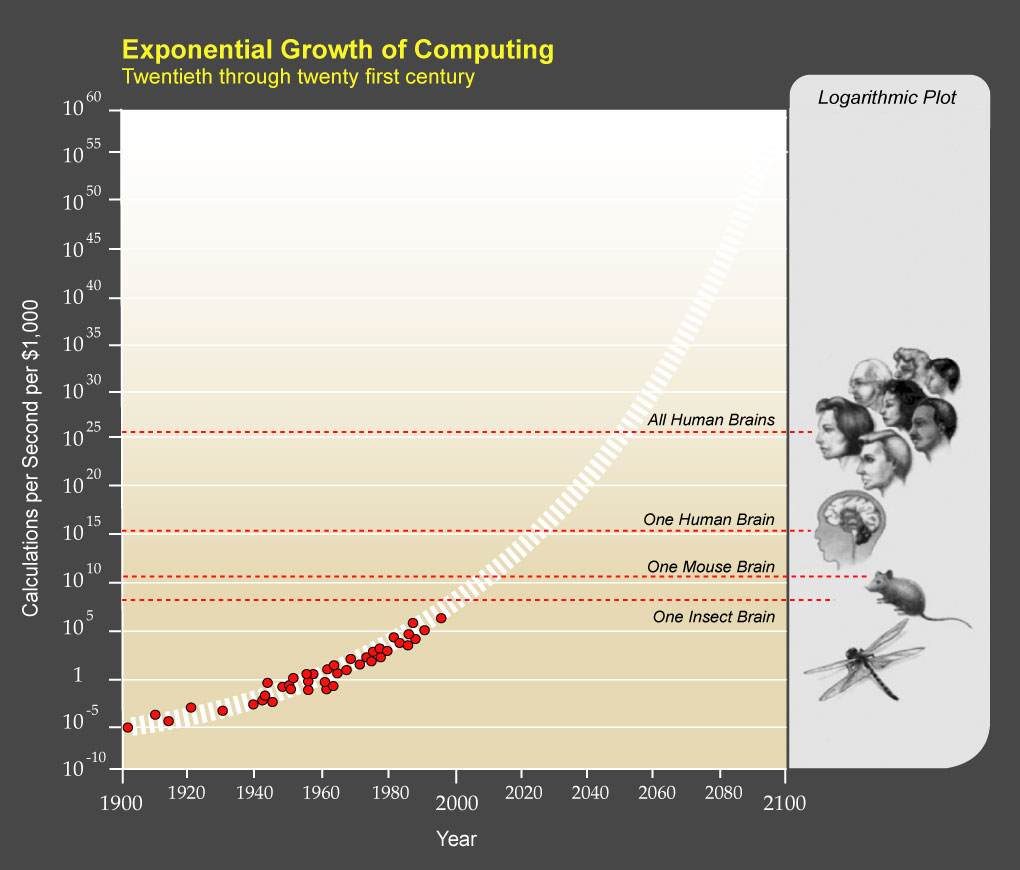

In its place, we have the exponential growth thesis (also known as the accelerating change thesis), popularized by Ray Kurzweil:

With this theory, technology does not evolve linearly but exponentially, which means that the rate of change is picking up fast.

According to Kurzweil's predictions, a computer will be able to simulate one human brain during this decade, and some argue that we are already there with GPT-4, a large language model. By 2050, a computer will be able to simulate all of humanity's collective knowledge.

Indeed, at the beginning of the curve, we see something that was evolving linearly...

... but as soon as we look at the rest of the curve, we see exponential growth, indicating that we are probably closer to an exponential scenario. While raw processing power is not the same thing as machines that can learn, it's interesting to note that current machine learning models are capable of remarkable feats simply by being trained on huge machines. The exponential growth model is the most accepted thesis in academia today.

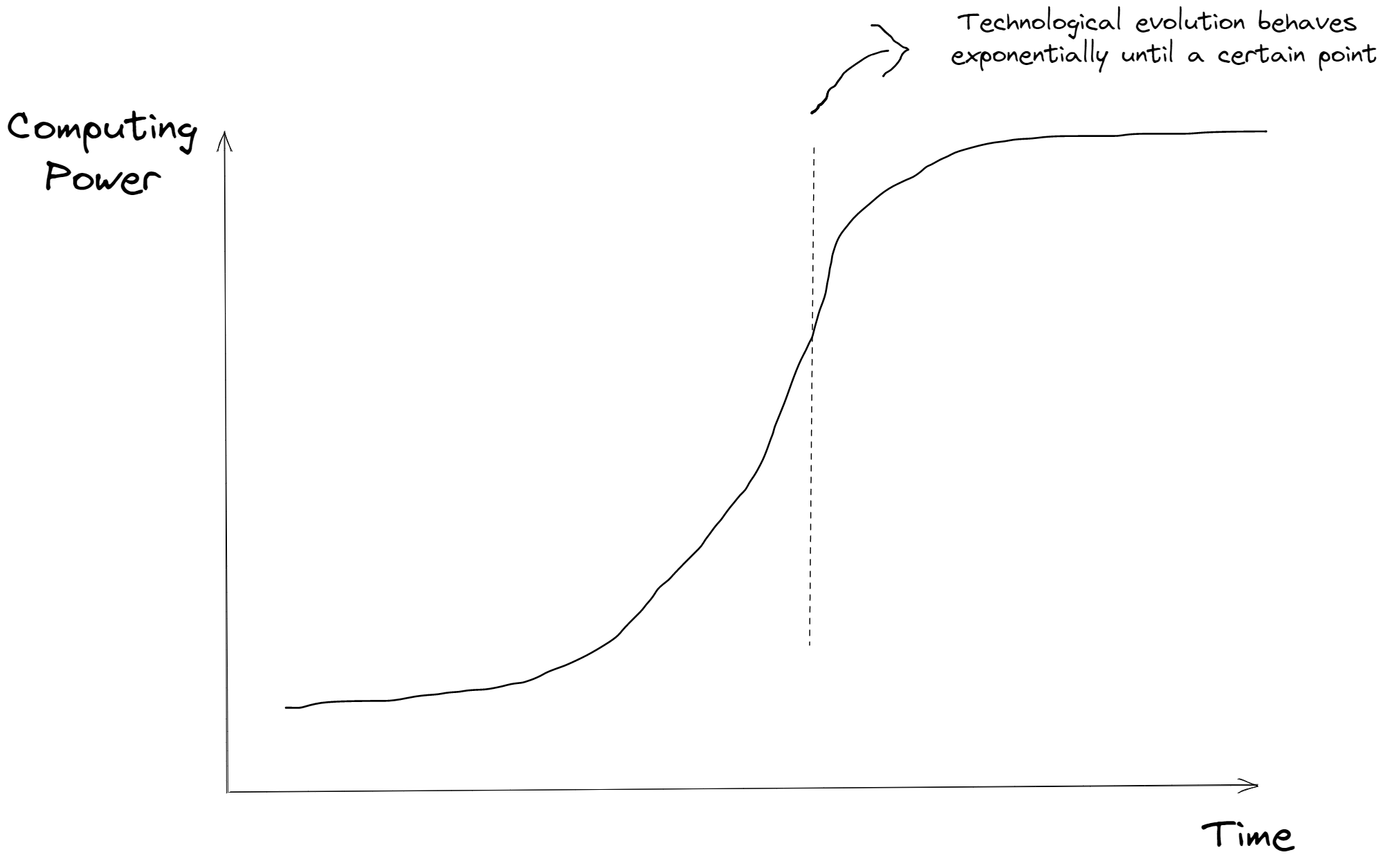

The third hypothesis (which is also unlikely) is sigmoid growth - meaning that technological growth will behave exponentially until a certain point and then hit a cap:

We don't know whether the sigmoid thesis (named after the s-shaped sigmoid curve) will ever play out. Given the data points that we have, there is no evidence that we will hit a plateau.
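To make the difference between the three theses more concrete, here is a minimal Python sketch that evaluates a linear, an exponential and a sigmoid (logistic) curve at the same points in time. Every number in it (the starting value, the growth rates and the cap) is an arbitrary assumption chosen for illustration, not real data on computing power, and the function names are hypothetical.

```python
import math


def linear(t, start=1.0, slope=1.0):
    """Linear thesis: the same absolute gain at every time step."""
    return start + slope * t


def exponential(t, start=1.0, rate=0.5):
    """Exponential thesis: the gain is proportional to the current level."""
    return start * math.exp(rate * t)


def sigmoid(t, start=1.0, rate=0.5, cap=100.0):
    """Sigmoid thesis: near-exponential growth at first, flattening at `cap`.

    This is the logistic curve, parameterized so that sigmoid(0) == start.
    """
    return cap / (1.0 + (cap / start - 1.0) * math.exp(-rate * t))


def growth_table(steps=range(0, 25, 4)):
    """Return (t, linear, exponential, sigmoid) rows for the given time steps."""
    return [(t, linear(t), exponential(t), sigmoid(t)) for t in steps]


if __name__ == "__main__":
    # Illustrative values only: "computing power" here is an abstract unit.
    for t, lin, exp_, sig in growth_table():
        print(f"t={t:2d}  linear={lin:8.1f}  exponential={exp_:12.1f}  sigmoid={sig:6.1f}")
```

With these assumed parameters, the exponential and sigmoid curves are almost indistinguishable early on, which is part of why the data points we have so far cannot tell us whether a plateau is coming.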

What this Means for Society and why you Should Care

Irrespective of your stance regarding the speed of technological evolution, one thing is certain: with the latest advances in artificial intelligence and the creation of groundbreaking technologies like GPT-4, there will be profound implications for all of us.

If the current pace of development continues, it is unlikely that humans will be able to keep up with technological innovation, which will affect our comprehension of the world, our value system and the way we work, live and interact with each other. And this will probably happen in your lifetime.

There is also a significant risk that these models may start to self-improve, further increasing the gap between humans and machines.

If you value democracy as a system, it is crucial that the deployment and impact of these models are not left solely to technocrats and corporations, or discussed only among experts. It is important that everyone understands what is at stake. In fact, it is the only fair way.

I'm not taking a stance on whether these outcomes will be positive or negative - I just believe that everyone must understand that they will happen and should have a way to participate in the discussion.

What can you do to start?

- Read a couple of resources about the exponential growth theory: The Singularity Is Near, Why the Future Doesn't Need Us and Superintelligence are good places to start.

- Press your local politicians to speak about the topic – AI regulation should be at the top of their agendas.

- Press your local media to speak about the topic - at the moment, artificial intelligence may be as threatening to humankind as climate change or nuclear warfare, but it gets roughly 1/100 of the media attention.

Artificial intelligence has the potential to transform society in countless ways, taking us to the next step in our society and making our lives easier and more enjoyable. However, this transformative power also brings with it significant risks and challenges, particularly when it comes to issues such as privacy, ethics, and the potential for AI to replace human jobs (and even lives).

It is crucial that as a society, we engage in open and transparent discussions about these issues, and work together to develop policies and regulations that ensure the responsible development and deployment of AI. This includes involving a diverse range of voices in the conversation, including policymakers, industry leaders, academics, and members of the general public. It all starts with you, your vote and your voice.

What we are doing at DareData

This is definitely not a commercial post. I own an Artificial Intelligence consulting company and have a financial incentive to tell you that everything will be alright and AI systems will be all smiles.

But they won't be. There are significant challenges ahead and we need to be aware of them to make informed decisions.

At DareData, we're seeing a lot of demand from companies for building these types of models, and we'll make sure we build them in the most responsible and fair way possible. We'll always be open and transparent about how they work and make sure that they are deployed with safeguards.

Given recent developments, democratizing machine learning is becoming even more important and we're committed to continuing that work, regardless of any commercial objective.