With the rapid advances in technology, it might seem that deploying AI in companies should be relatively straightforward. After all, with tools like ChatGPT and other advanced models that appear to work like “magic,” how hard can it really be to get results inside an enterprise?

Yet many companies still struggle to achieve a solid return on investment (ROI) from their AI initiatives. The issue isn’t access to technology; it’s understanding and managing the nature of AI.

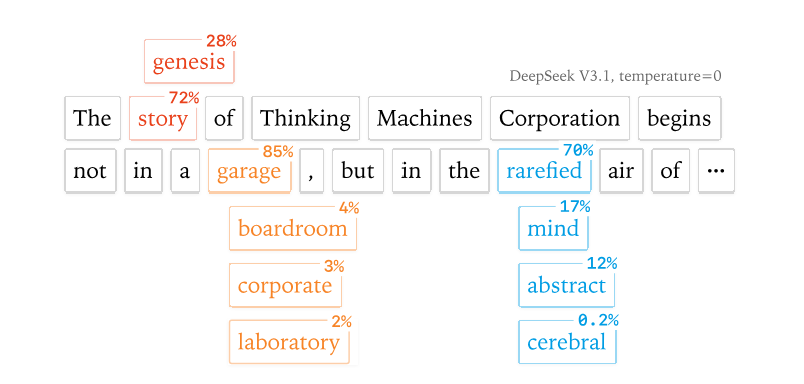

AI systems, particularly those based on machine learning (as most AI systems are today), are non-deterministic in practice. This means their outputs can vary, even when given the same input. Generative models produce probabilities and sample from them, and that randomness, combined with complex interactions inside the model, makes their behavior hard to predict. You can see this with ChatGPT, which rarely gives the exact same answer to the same question twice.
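To make the source of this variability concrete, here is a minimal Python sketch of temperature-scaled sampling, the kind of decoding step generative language models typically use to choose their next token. The candidate tokens and scores are hypothetical, and real models sample over vocabularies of many thousands of tokens, but the principle is the same: the model outputs a probability distribution, and the answer is drawn from it.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw model scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate outputs and model scores for one fixed input.
tokens = ["approve", "reject", "escalate"]
logits = [2.0, 1.5, 0.5]

# Same input, five calls: the answers can differ from call to call.
print([sample_next_token(tokens, logits) for _ in range(5)])

# Fixing the seed makes a run reproducible, but the outputs are still random draws.
seeded = random.Random(42)
print([sample_next_token(tokens, logits, rng=seeded) for _ in range(5)])
```

Lowering the temperature pushes probability toward the top-scoring option and makes outputs more repeatable, but it trades away variety rather than removing the underlying uncertainty.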

For organizations, this unpredictability creates challenges in reliability, reproducibility, and governance. You can’t treat AI like traditional software, where outputs are fixed and rules are explicit. Instead, you need a framework that controls for non-determinism through consistent data quality, human oversight, model monitoring, and well-defined feedback loops.

Humans Save the Day

You’ve probably heard that the future of work will be Human + AI. Tasks will be automated, but jobs are made up of many tasks, so automating some of them rarely eliminates the job. As automation expands, new roles will emerge that integrate AI-driven tasks with human judgment. People will step in when AI systems reach their limits or fail to recognize their own mistakes.

Humans and machines will need to work together, not only because it’s the humanistic thing to do, but because it’s operationally necessary. When AI systems reach their limits, you still need people who can reason through ambiguity, understand nuance, and use broader context to make the right call.

And make no mistake: AI will fail. It’s built to predict, not to be perfect. Every prediction carries a degree of uncertainty, and that means some level of error will always be present.

In a business context, those errors can be costly. A wrong decision made by an algorithm can trigger financial loss, reputational damage, or even legal exposure. In extreme cases, it can push a company to the edge of bankruptcy.

That’s why most organizations remain cautious about deploying AI in their most critical processes. A well-designed system must include strong safeguards that keep humans in the loop.
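One common way to keep humans in the loop is to let the model act only when it is confident and the stakes are low, and to route everything else to a reviewer. The Python sketch below illustrates that pattern; the `Prediction` fields, the `high_impact` flag, and the 0.9 threshold are hypothetical choices for illustration, not a prescribed design. It also assumes the model exposes a usable confidence score, which in practice should be checked, since raw model scores are often poorly calibrated.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float          # model's probability for its chosen label, 0 to 1
    high_impact: bool = False  # hypothetical flag for decisions that are too costly to get wrong

def route(prediction, threshold=0.9):
    """Automate only confident, low-stakes predictions; send the rest to a person."""
    if prediction.high_impact:
        return "human_review"  # critical decisions always get a human check
    if prediction.confidence >= threshold:
        return "auto"
    return "human_review"

# Hypothetical predictions from a single batch.
predictions = [
    Prediction("A-101", "approve", 0.97),
    Prediction("A-102", "reject", 0.62),
    Prediction("A-103", "approve", 0.95, high_impact=True),
]
for p in predictions:
    print(p.case_id, route(p))
```

The threshold is a business decision as much as a technical one: raising it sends more work to people but lowers the error rate of what gets automated.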

It’s Still (Somewhat) an Engineering Problem

Non-determinism is an engineering problem, but not one that traditional methods can fully solve. AI systems behave probabilistically, yet this doesn’t make engineering less relevant; it makes it more essential than ever. Just as classical machine learning only gained business relevance once data scientists moved beyond notebooks and into production, LLMs will only deliver business results once engineers mitigate the problem of non-determinism.

AI demands a new kind of engineering discipline: one that treats uncertainty as part of the design, not as noise to be eliminated. This “statistics-at-scale” mindset is what allows companies to deploy AI responsibly. Engineering now has to manage randomness, monitor performance drift, and build feedback systems that learn continuously.

For companies, handling non-determinism at scale starts with good engineering fundamentals: reliable data pipelines, contextual integrations, access control, and rigorous testing. But even after those are solved, another layer remains: the new discipline of engineering for reliability around inherently unpredictable systems. Call it “probabilistic scaling.”

Building for Reliability in a Probabilistic World

When companies deploy AI use cases, they often start with models that perform only “good enough.” Early systems operate with limited accuracy and heavy human oversight.

That’s normal: the first goal is not perfection, but stability. A well-designed engineering system learns from its own operations: every human correction, every piece of feedback from the data, and every edge case feeds improvement. Over time, the system should automate more of what humans once handled manually. This is how accuracy compounds: through iteration, monitoring, and disciplined feedback loops rather than one-off model training. It also means accepting that not every case can be automated.
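One way to let human corrections translate into more automation is to re-derive the automation threshold from the review log. The Python sketch below assumes each automated decision is gated by a model confidence score, and that every reviewed case is logged as a `(confidence, was_correct)` pair; `pick_threshold`, `coverage`, the 98% target, and the minimum sample size are all hypothetical names and values. It finds the lowest threshold whose historical accuracy meets the target, so as the model improves and reviews accumulate, the threshold can drop and the automated share can grow.

```python
def pick_threshold(reviewed, target_accuracy=0.98, min_cases=50):
    """
    reviewed: list of (confidence, was_correct) pairs gathered from human reviews.
    Returns the lowest confidence threshold whose automated slice meets the
    accuracy target on at least `min_cases` past cases, or None if none does.
    """
    # Candidate thresholds are the distinct confidence values seen, lowest first.
    for t in sorted({c for c, _ in reviewed}):
        above = [ok for c, ok in reviewed if c >= t]
        if len(above) < min_cases:
            break  # higher thresholds only shrink the slice further
        if sum(above) / len(above) >= target_accuracy:
            return t
    return None

def coverage(reviewed, threshold):
    """Share of past cases that would have been automated at this threshold."""
    if threshold is None or not reviewed:
        return 0.0
    return sum(1 for c, _ in reviewed if c >= threshold) / len(reviewed)

# Hypothetical review log: (model confidence, did the reviewer confirm the prediction?)
history = [(0.95, True)] * 60 + [(0.70, True)] * 30 + [(0.70, False)] * 10
t = pick_threshold(history)
print(t, coverage(history, t))  # prints: 0.95 0.6
```

Requiring a minimum number of cases keeps the threshold from being set on a handful of lucky examples; cases that never clear the bar stay with people, which is exactly the slice that may never be worth automating.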

Adapting to a Changing World

The world doesn’t stand still, and neither can statistical systems.

Market conditions, customer behavior, your own enterprise, and external data sources evolve constantly, which means yesterday’s AI model can quickly become outdated.

Reliable AI infrastructure must be built for life after deployment. When the world shifts, AI systems must be able to incorporate that new information easily. This requires an ecosystem that can sense, adapt, and evolve: when the environment shifts (new behaviors, new data patterns, or an evolving enterprise), the system captures that change, interprets it, and adjusts.
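The “sense” step can start with something as simple as comparing recent inputs against the data the model was built on. The Python sketch below computes the Population Stability Index (PSI), one widely used drift statistic, between a baseline sample and a recent sample of a single numeric feature. The bin count, the small floor for empty bins, and the synthetic Gaussian data are assumptions for illustration; production monitoring would track many features and model outputs, not just one.

```python
import math
import random

def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic data: a baseline, a fresh sample from the same distribution,
# and a sample whose mean has shifted (a stand-in for changed customer behavior).
rng = random.Random(0)
baseline = [rng.gauss(0, 1) for _ in range(5000)]
same = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(1, 1) for _ in range(5000)]

print(round(psi(baseline, same), 3))     # small: no meaningful drift
print(round(psi(baseline, shifted), 3))  # much larger: the inputs have moved
```

A frequently quoted rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as a significant shift worth investigating, but those cut-offs are conventions, not guarantees; the right alert level depends on how sensitive the use case is.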

The AI of the future will only be safe when organizations are able to adapt as fast as the world changes around them. Deploying AI isn’t about reaching a “final state” or a “perfectly accurate system”; it’s about building a system that learns, corrects, and evolves continuously.

The companies that will thrive are not those with the most advanced models, but those with the strongest post-deployment infrastructure. Systems that monitor, guide, and improve will be the ones that carry enterprises to the next stage.